Changelog

Version 2.0.2 (2025-10-14)

Support Python 3.14

Provide `manylinux_2_35` wheels instead of `manylinux_2_31` (because GitHub Actions dropped Ubuntu 20.04 runners)

Versions 2.0.0 and 2.0.1 (2024-12-06)

Support Python 3.12 and 3.13

Drop support for macOS 11 (not supported by GitHub Actions anymore)

Drop support for macOS 12 (not supported by GitHub Actions anymore)

Fix the `universal2` wheels for macOS to actually run on M1 Macs

Major: Implement single-peaked criteria:

- on the command line, `lincs generate classification-problem` has a new `--allow-single-peaked-criteria` option
- in the Problem file format, there is a new value `single-peaked` for `preference_direction`
- in the Model file format, there is a new possible value `intervals` for `accepted_values.kind`, used with `intervals: [[20, 80], [40, 60]]`
- in the Python API:
  - there is a new value `single_peaked` in `Criterion.PreferenceDirection`, typically used with `lc.generate_problem(..., allowed_preference_directions=[..., lc.Criterion.PreferenceDirection.single_peaked])`
  - there is a new value `intervals` in `AcceptedValues.Kind`
  - some strategies for `LearnMrsortByWeightsProfilesBreed` must explicitly opt in to support single-peaked criteria by calling their base class constructor with `supports_single_peaked_criteria=True`
  - Breaking: Adapt parts of the Python API to support single-peaked criteria
- all the learning approaches work with single-peaked criteria
- the human-readable output of `lincs describe` has changed slightly to accommodate single-peaked criteria
- `lincs visualize` fails when called with single-peaked criteria. See this discussion
- documented in our “Single-peaked criteria” guide

Breaking: Let some thresholds be unreachable (fix for a bug found in real-life ASA data):

- in the Model file format, the last items in a `thresholds` or `intervals` list can be `null`
- in the Python API, the last items in a `thresholds` or `intervals` list can be `None` (there is a runtime check that all items are `None` after the first `None`)

Breaking: Split `LearnMrsortByWeightsProfilesBreed.LearningData` into `PreprocessedLearningSet` and `LearnMrsortByWeightsProfilesBreed.ModelsBeingLearned`

Breaking: Rename `LearningData.urbgs` to `ModelsBeingLearned.random_generators`

Changes behavior slightly: Optimize WPB model weights before evaluating and returning them

Breaking: Add parameter `weights_optimization_strategy` to the `ReinitializeLeastAccurate` breeding strategy (required to fix the previous point)

Changes behavior slightly: Always keep the best model during WPB

Changes behavior slightly: Upgrade to OR-Tools 9.11 and CUDA 12.4

Add method `.check_consistency_with(problem: Problem)` to `Model` and `Alternatives`

Add methods `ModelsBeingLearned.get_model` and `.recompute_accuracy`
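To make the new `intervals` kind and the nullable last items more concrete, here is a minimal sketch in plain Python. It does not use lincs, and the function names are hypothetical. It assumes that each interval `[low, high]` describes the values accepted at one category boundary (a value is accepted if `low <= value <= high`, narrower intervals for better categories), and that a `None` interval marks a boundary that can never be reached.

```python
from typing import Optional, Sequence, Tuple

Interval = Tuple[float, float]


# Hypothetical helper (not part of the lincs API): mirrors the documented runtime
# check that every item after the first None is also None.
def check_trailing_nones(items: Sequence[Optional[object]]) -> None:
    seen_none = False
    for index, item in enumerate(items):
        if item is None:
            seen_none = True
        elif seen_none:
            raise ValueError(f"item {index} is set after an unreachable (None) item")


# Hypothetical helper: a value is accepted if it lies in the closed interval;
# a None interval (unreachable boundary) accepts nothing.
def is_accepted(value: float, interval: Optional[Interval]) -> bool:
    if interval is None:
        return False
    low, high = interval
    return low <= value <= high


# Hypothetical helper: count how many consecutive boundaries, from the lowest,
# accept the value on this single-peaked criterion.
def boundaries_passed(value: float, intervals: Sequence[Optional[Interval]]) -> int:
    check_trailing_nones(intervals)
    count = 0
    for interval in intervals:
        if not is_accepted(value, interval):
            break
        count += 1
    return count


# Same numbers as the Model file format example: [[20, 80], [40, 60]]
intervals = [(20, 80), (40, 60)]
print(boundaries_passed(50, intervals))  # 2: inside both intervals
print(boundaries_passed(70, intervals))  # 1: inside [20, 80] only
print(boundaries_passed(10, intervals))  # 0: outside both intervals

# The last boundary can never be reached:
print(boundaries_passed(50, [(20, 80), None]))  # 1
```

In this sketch, a value far from the peak on either side is rejected, which is what distinguishes a single-peaked criterion from an increasing or decreasing one.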

Version 1.1.0 (2024-02-09)

Publish the Python API

This release establishes the second tier of the stable API: the Python interface.

Introduction: our “Python API” guide, downloadable as a Jupyter Notebook

Reference: in our complete reference documentation

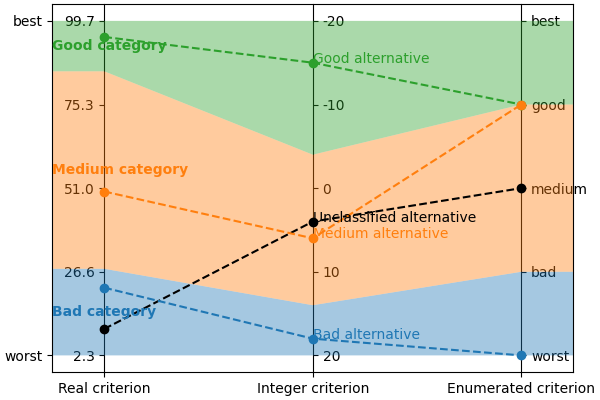

Improve lincs visualize¶

Replace legend by colored text annotations

Support visualizing single-criterion models

Add one graduated vertical axis per criterion

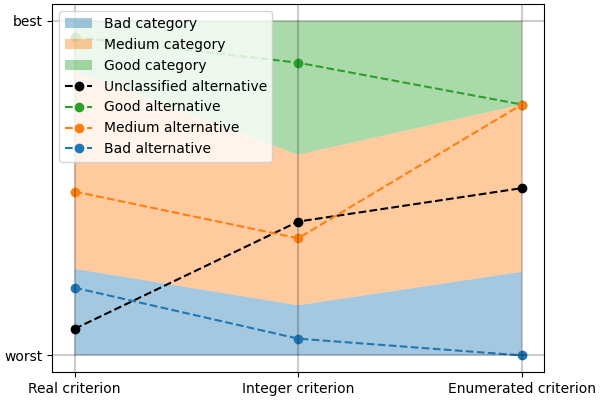

See the following “before” and “after” images:

Smaller changes

Breaking: Drop support for Python 3.7

Support discrete criteria (with enumerated or integer values)

Homogenize post-processing: this changes the numerical values of the thresholds learned by SAT-based approaches, but not their achieved accuracy

Improve validation of input data (e.g. check consistency between problem, model, and alternatives)

Build a Docker image on release (not published since 0.5.0), documented in our “Get started” guide

Support `copy.deepcopy` on I/O objects

Support pickling of I/O objects

Fix `TerminateAfterIterations` and `TerminateAfterIterationsWithoutProgress` strategies: they were allowing slightly too many iterations

Expose parameters of EvalMaxSAT in our API and command-line interface (see `lincs learn classification-model --help`):

- `--ucncs.max-sat-by-separation.solver` (for consistency, always `"eval-max-sat"` for now)
- `--ucncs.max-sat-by-separation.eval-max-sat.nb-minimize-threads`
- `--ucncs.max-sat-by-separation.eval-max-sat.timeout-fast-minimize`
- `--ucncs.max-sat-by-separation.eval-max-sat.coef-minimize-time`
- `--ucncs.max-sat-by-coalitions.solver` (for consistency, always `"eval-max-sat"` for now)
- `--ucncs.max-sat-by-coalitions.eval-max-sat.nb-minimize-threads`
- `--ucncs.max-sat-by-coalitions.eval-max-sat.timeout-fast-minimize`
- `--ucncs.max-sat-by-coalitions.eval-max-sat.coef-minimize-time`

Version 1.0.0 (2023-11-22)

This is the first stable release of lincs. It establishes the first tier of the stable API: the command-line interface.

Add a roadmap in the documentation

Version 0.11.1

This is the third release candidate for version 1.0.0.

Technical refactoring

Version 0.11.0

This is the second release candidate for version 1.0.0.

Breaking: Rename `category_correlation` to `preference_direction` in problem files

Breaking: Rename the `growing` preference direction to `increasing` in problem files

Breaking: Rename the `categories` attribute to `ordered_categories` in problem files

Make names of generated categories more explicit (“Worst category”, “Intermediate category N”, “Best category”)

Support `isotone` (resp. `antitone`) as a synonym for `increasing` (resp. `decreasing`) in problem files

Add the `lincs describe` command to produce human-readable descriptions of problems and models

Remove comments about termination conditions from learned models, but:

- Add `--mrsort.weights-profiles-breed.output-metadata` to generate in YAML the data previously found in those comments

Provide a Jupyter notebook to help follow the “Get Started” guide (and use Jupyter for all integration tests)

Document the “externally managed” error on Ubuntu 23.04+

(In versions below, the term “category correlation” was used instead of “preference direction”.)

Versions 0.10.0 to 0.10.3

This is the first release candidate for version 1.0.0.

Breaking: Allow more flexible description of accepted values in the model JSON schema. See the user guide for details.

Breaking: Rename option `--ucncs.approach` to `--ucncs.strategy`

Breaking: Rename option `--output-classified-alternatives` to `--output-alternatives`

Fix line ends on Windows

Fix `lincs visualize` to use criteria’s min/max values and category correlation

Validate consistency with problem when loading alternatives or model files

Output “reproduction command” in `lincs classify`

Improve documentation

Versions 0.9.0 to 0.9.2

Pre-process the learning set before all learning algorithms.

Possible values for each criterion are listed and sorted before the actual learning starts so that learning algorithms now see all criteria as:

having increasing correlation with the categories

having values in a range of integers

This is a simplification for implementers of learning algorithms, and improves the performance of the weights-profiles-breed approach.

Expose `SufficientCoalitions::upset_roots` to Python

Fix alternative names when using the `--max-imbalance` option of `lincs generate classified-alternatives`

Produce cleaner error when `--max-imbalance` is too tight

Print number of iterations at the end of WPB learnings

Display lincs’ version in the “Reproduction command” comment in generated files

Various improvements to the code’s readability
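The pre-processing of the learning set described for this release can be illustrated with a short sketch in plain Python. This is not lincs' actual implementation: `preprocess_criterion` is a hypothetical helper, and it assumes that each criterion's distinct values are listed, sorted, and replaced by their rank, with the order reversed for criteria whose preference direction is decreasing, so that a larger integer always means a better value.

```python
from typing import Dict, List, Sequence, Tuple


# Hypothetical helper (not the lincs API): maps raw values of one criterion to
# integer ranks that always increase with preference.
def preprocess_criterion(
    values: Sequence[float], increasing: bool
) -> Tuple[List[int], Dict[float, int]]:
    # List the distinct values and sort them so that the best value gets the highest rank.
    distinct = sorted(set(values), reverse=not increasing)
    rank_of = {value: rank for rank, value in enumerate(distinct)}
    return [rank_of[value] for value in values], rank_of


# A criterion where higher is better (e.g. a grade):
ranks, _ = preprocess_criterion([12.5, 8.0, 15.0, 8.0], increasing=True)
print(ranks)  # [1, 0, 2, 0]

# A criterion where lower is better (e.g. a price):
ranks, _ = preprocess_criterion([300, 100, 200], increasing=False)
print(ranks)  # [0, 2, 1]
```

In this sketch, keeping the `rank_of` mapping is what would allow thresholds learned on the integer ranks to be translated back to the original value scale.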

Version 0.8.7

Integrate CUDA parts on Windows

Compile with OpenMP on Windows

Versions 0.8.5 to 0.8.6

Distribute binary wheels for Windows!

Versions 0.8.0 to 0.8.4

Rename option `--...max-duration-seconds` to `--...max-duration`

Display termination condition after learning using the `weights-profiles-breed` approach

Make termination of the `weights-profiles-breed` approach more consistent

Integrate Chrones (as an optional dependency, on Linux only)

Display iterations in `--...verbose` mode

Fix pernicious memory bug

Version 0.7.0

Bugfixes:

Fix the Linux wheels: make sure they are built with GPU support

Improve building lincs without `nvcc` (e.g. on macOS):

- provide the `lincs info has-gpu` command
- adapt `lincs learn classification-model --help`

Features:

Add “max-SAT by coalitions” and “max-SAT by separation” learning approaches (hopefully correct this time!)

Use YAML anchors and aliases to limit repetitions in the model file format when describing \(U^c \textsf{-} NCS\) models

Support specifying the minimum and maximum values for each criterion in the problem file:

- Generate synthetic data using these attributes (`--denormalized-min-max`)
- Adapt the learning algorithms to use these attributes

Support criteria with decreasing correlation with the categories:

- in the problem file
- when generating synthetic data (`--allow-decreasing-criteria`)
- in the learning algorithms

Add a comment to all generated files stating the command-line to use to re-generate them

Use enough decimals when storing floating point values in models to avoid any loss of precision

Log final accuracy with `--mrsort.weights-profiles-breed.verbose`

Improve tests
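Why “enough decimals” matters when storing floating point values can be shown in plain Python. This illustrates a general property of IEEE 754 double precision numbers, not code from lincs: 17 significant digits are always enough for a `float` to survive a text round trip, whereas fewer digits can silently change the stored value. The `round_trips` helper is hypothetical.

```python
# Hypothetical helper: checks whether formatting x with the given number of
# significant digits and parsing it back gives exactly the same float.
def round_trips(x: float, significant_digits: int) -> bool:
    return float(format(x, f".{significant_digits}g")) == x


x = 0.1 + 0.2  # stored as 0.30000000000000004 in double precision
print(format(x, ".6g"))    # 0.3
print(round_trips(x, 6))   # False: the stored value would change
print(round_trips(x, 17))  # True: 17 significant digits always suffice for a double

# Python's repr() picks the shortest string that round-trips exactly:
print(float(repr(x)) == x)  # True
```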

Version 0.6.0

Remove buggy “max-SAT by coalitions” approach

Add “SAT by separation” approach

Version 0.5.1

Publish wheels for macOS

Version 0.5.0

Implement the “SAT by coalitions” and “max-SAT by coalitions” (removed in 0.6.0) learning methods

Add `misclassify_alternatives` to synthesize noise on alternatives

Extend the model file format to support specifying the sufficient coalitions by their roots

Produce “manylinux_2_31” binary wheels

Improve YAML schemas for problem and model file formats

Use the “flow” YAML formatting for arrays of scalars

Improve consistency between Python and C++ APIs (not yet documented though)

Add more control over the “weights, profiles, breed” learning method (termination strategies, “verbose” option)

Add an expansion point for the breeding part of “weights, profiles, breed”

Add an exception for failed learnings
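Specifying sufficient coalitions by their roots works because the set of sufficient coalitions is upward-closed: any superset of a sufficient coalition is sufficient too, so listing the minimal ones (the roots of the upset) is enough to describe all of them. The following minimal sketch in plain Python assumes criteria are identified by their indices; `is_sufficient` is a hypothetical helper, not part of the lincs API.

```python
from typing import Iterable


# Hypothetical helper: a coalition is sufficient if and only if it contains
# (is a superset of) at least one of the root coalitions.
def is_sufficient(coalition: Iterable[int], roots: Iterable[Iterable[int]]) -> bool:
    members = frozenset(coalition)
    return any(frozenset(root) <= members for root in roots)


# Illustrative roots over criteria 0, 1 and 2: {0, 1} and {2} are the minimal sufficient coalitions.
roots = [{0, 1}, {2}]
print(is_sufficient({0, 1}, roots))     # True: equal to a root
print(is_sufficient({0, 1, 2}, roots))  # True: superset of a root
print(is_sufficient({0}, roots))        # False: contains no root
print(is_sufficient({1, 2}, roots))     # True: contains the root {2}
```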

Version 0.4.5

Use JSON schemas to document and validate the problem and model files

Support development on macOS and on machines without a GPU

Improve documentation

Versions 0.4.1 to 0.4.4

Never properly published

Version 0.4.0

Add a GPU (CUDA) implementation of the accuracy heuristic strategy for the “weights, profiles, breed” learning method

Introduce Alglib as an LP solver for the “weights, profiles, breed” learning method

Publish a Docker image with lincs installed

Change “domain” to “problem” everywhere

Improve documentation

Improve model and alternatives visualization

Expose `Alternative::category` properly

Versions 0.3.4 to 0.3.7

Improve documentation

Version 0.3.3

Fix Python package description

Version 0.3.2

License (LGPLv3)

Version 0.3.1

Fix installation (missing C++ header file)

Version 0.3.0

Implement learning an MR-Sort model using Sobrie’s heuristic on CPU

Version 0.2.2

Add options: `generate model --mrsort.fixed-weights-sum` and `generate classified-alternatives --max-imbalance`

Version 0.2.1

Fix images on the PyPI website

Version 0.2.0

Implement generation of pseudo-random synthetic data

Implement classification by MR-Sort models

Kick-off the documentation effort with a quite nice first iteration of the README
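For readers new to MR-Sort, its classification rule can be sketched in a few lines of plain Python. This is not lincs' code, and `mrsort_classify` is a hypothetical function. The sketch assumes that all criteria are increasing, that `profiles[k]` is the boundary between categories `k` and `k + 1` (profiles ordered from the lowest boundary up), and the convention that a coalition of criteria is sufficient when its weights sum to at least 1.

```python
from typing import Sequence


# Hypothetical function (not the lincs API): returns the index of the category
# (0 = worst) that an MR-Sort model assigns to an alternative.
def mrsort_classify(
    alternative: Sequence[float],
    profiles: Sequence[Sequence[float]],
    weights: Sequence[float],
) -> int:
    category = 0
    for profile in profiles:  # from the lowest boundary up
        # Sum the weights of the criteria on which the alternative reaches the profile.
        support = sum(
            weight
            for value, threshold, weight in zip(alternative, profile, weights)
            if value >= threshold
        )
        if support < 1:
            break  # the coalition is not sufficient: stop below this boundary
        category += 1
    return category


# Three criteria, any two of which form a sufficient coalition:
weights = [0.5, 0.5, 0.5]
profiles = [[10, 10, 10], [20, 20, 20]]
print(mrsort_classify([15, 25, 5], profiles, weights))  # 1
print(mrsort_classify([25, 25, 5], profiles, weights))  # 2
print(mrsort_classify([5, 5, 25], profiles, weights))   # 0
```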

Version 0.1.3

Initial publication with little functionality